Amazon Q is a generative artificial intelligence (AI) assistant from AWS. It is designed to analyze business trends, assist with software development, and help companies get the most out of their internal data. Amazon Q connects with a wide range of AWS services. One of its flagship offerings is Amazon Q Business. Another key integration is with Amazon QuickSight, the AWS business intelligence (BI) service. In this post, we describe how Amazon Q enhances each of these two services.

What is Amazon Q?

Let's first look at Amazon Q itself, the foundational layer underlying both Amazon Q Business and Amazon Q in QuickSight.

Amazon Q is a platform developed by AWS that uses generative AI to enhance a variety of business processes. It can generate code, create tests, and debug code. Its multistep planning and reasoning capabilities also make it easier for employees to get answers to questions across all of their business data, such as company policies, compliance requirements, product offerings, performance metrics, code bases, and employee information. Amazon Q does this by connecting to enterprise data repositories, summarizing the data logically, analyzing trends, and supporting a conversation about the information.

Amazon Q Business

Amazon Q Business is a fully managed, generative AI-powered assistant that you can configure to answer questions, provide summaries, generate content, and complete tasks. It is designed with enterprise-level security in mind. End users receive immediate, permissions-aware responses from enterprise data sources, complete with citations, for use cases such as IT, HR, and benefits help desks. Amazon Q Business also helps streamline tasks and speed up decision-making without exposing your data to public models. You can use it to create and share task automation applications, or to perform routine actions such as submitting time-off requests and sending meeting invites.

Amazon Q in QuickSight

Amazon QuickSight offers a comprehensive range of features comparable to those of leading BI tools, but its integration with Amazon Q gives it a distinct competitive advantage. With Amazon Q acting as a generative AI assistant on top of QuickSight, business users can explore data more easily. The new Q&A experience in Amazon Q in QuickSight returns multi-visual responses complete with data previews, freeing users from the manual, repetitive work of extracting insights from traditional dashboards.

This functionality streamlines how data analyses and reports are generated and presented, supporting more effective decision-making and strategic initiatives. Stakeholders do not need in-depth knowledge of a data query language or of the dashboarding tool to create a specific dashboard. Instead, they can simply ask Amazon Q for the output they want, and it will sift through the data, select the most suitable and presentable visual, and generate it in Amazon QuickSight within seconds.

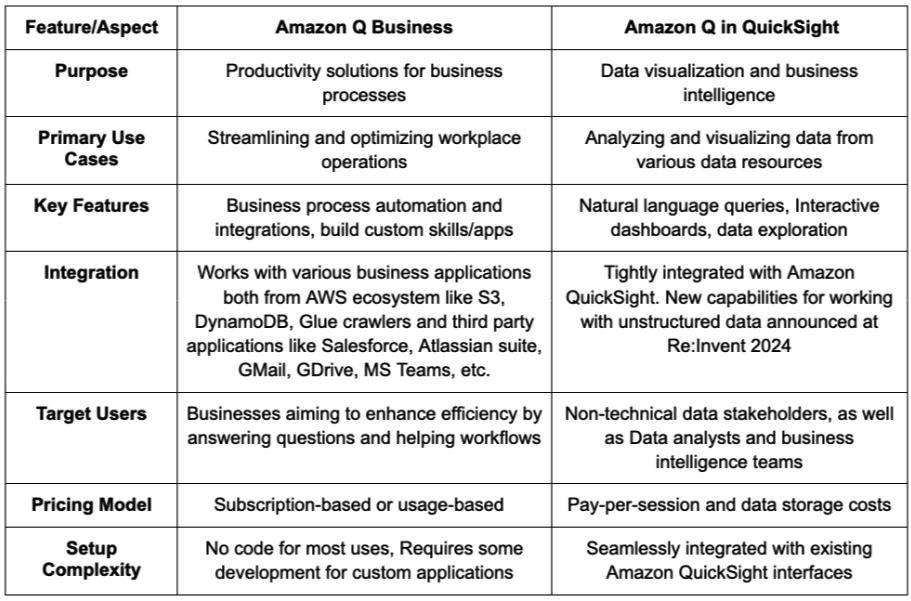

Let's summarize the key differences.

Amazon Q Business and Amazon Q in QuickSight serve distinct but complementary roles within the AWS ecosystem, and understanding how they differ helps organizations use each one effectively. Both are powered by the generative AI capabilities of Amazon Q. Amazon Q Business acts as an AI assistant that boosts productivity across business tasks, whereas Amazon Q in QuickSight is used for advanced data visualization, rapid reporting and dashboarding, and analysis across various data sources.

Here are a couple of links to recent AWS announcements:

Amazon Q Business Insights from Databases and Data Warehouses (Preview)

Query Structured Data From Amazon Q Business Using Amazon QuickSight Integration
